| [iio@192 bin]$ ./logstash -f ../config/logstash-elastic.yml

Sending Logstash logs to /usr/elastic/logstash/logs which is now configured via log4j2.properties

[2020-03-01T18:26:42,445][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2020-03-01T18:26:42,638][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.6.0"}

[2020-03-01T18:26:45,170][INFO ][org.reflections.Reflections] Reflections took 46 ms to scan 1 urls, producing 20 keys and 40 values

[2020-03-01T18:26:47,179][INFO ][logstash.outputs.elasticsearch][main] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.237.11:9200/]}}

[2020-03-01T18:26:47,487][WARN ][logstash.outputs.elasticsearch][main] Restored connection to ES instance {:url=>"http://192.168.237.11:9200/"}

[2020-03-01T18:26:47,553][INFO ][logstash.outputs.elasticsearch][main] ES Output version determined {:es_version=>7}

[2020-03-01T18:26:47,561][WARN ][logstash.outputs.elasticsearch][main] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}

[2020-03-01T18:26:47,760][INFO ][logstash.outputs.elasticsearch][main] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//192.168.237.11:9200"]}

[2020-03-01T18:26:47,855][INFO ][logstash.outputs.elasticsearch][main] Using default mapping template

[2020-03-01T18:26:47,900][WARN ][org.logstash.instrument.metrics.gauge.LazyDelegatingGauge][main] A gauge metric of an unknown type (org.jruby.specialized.RubyArrayOneObject) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[2020-03-01T18:26:47,914][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/elastic/logstash/config/logstash-elastic.yml"], :thread=>"#<Thread:0x77e7dd14 run>"}

[2020-03-01T18:26:48,011][INFO ][logstash.outputs.elasticsearch][main] Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}

[2020-03-01T18:26:49,458][INFO ][logstash.inputs.file ][main] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/elastic/logstash/data/plugins/inputs/file/.sincedb_b626b2bdb9f76816ac98ff32e97c96bf", :path=>["/usr/elastic/logs/diy.log"]}

[2020-03-01T18:26:49,506][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2020-03-01T18:26:49,600][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2020-03-01T18:26:49,622][INFO ][filewatch.observingtail ][main] START, creating Discoverer, Watch with file and sincedb collections

[2020-03-01T18:26:50,082][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

/usr/elastic/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

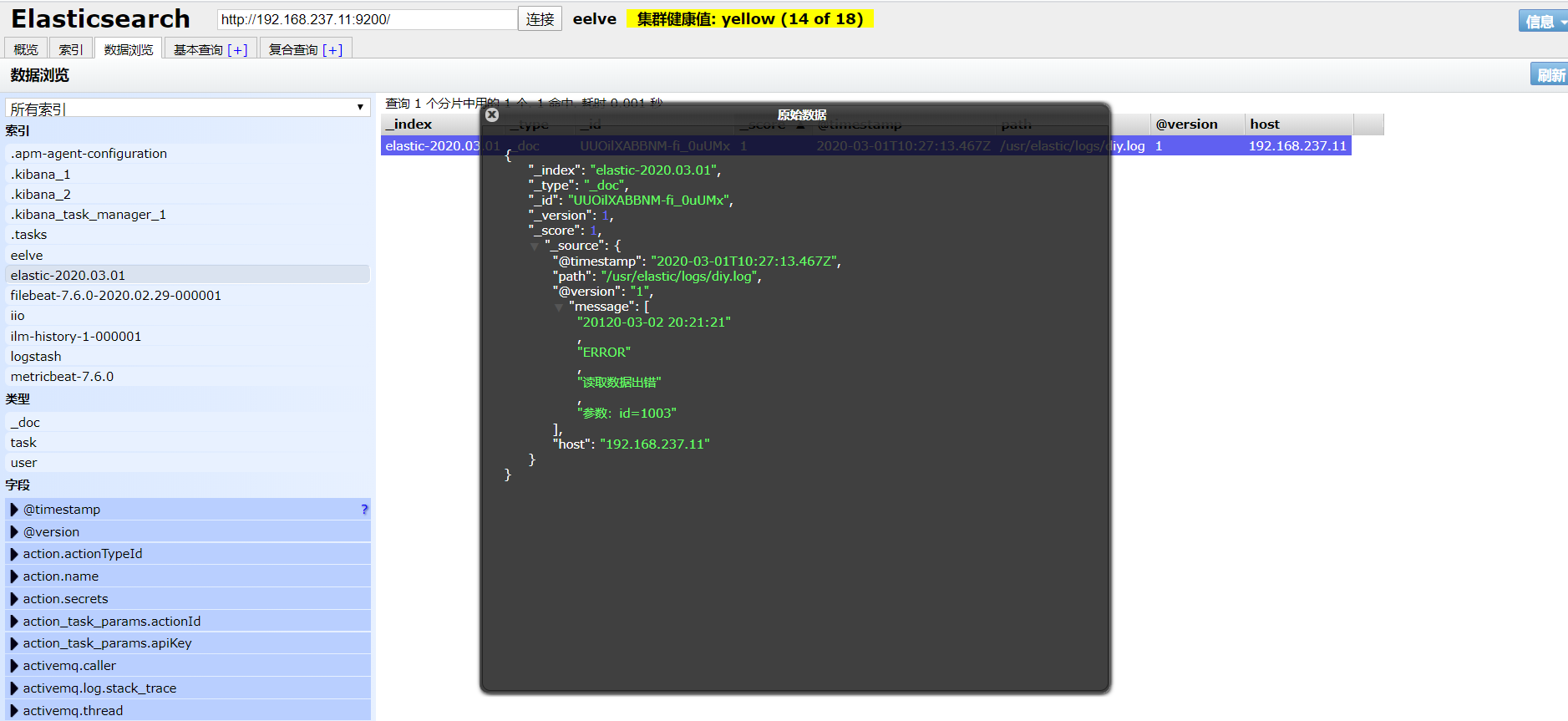

{

"@timestamp" => 2020-03-01T10:27:13.467Z,

"path" => "/usr/elastic/logs/diy.log",

"@version" => "1",

"message" => [

[0] "20120-03-02 20:21:21",

[1] "ERROR",

[2] "Error reading data",

[3] "Parameter: id=1003"

],

"host" => "192.168.237.11"

}

|
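
The startup log and the printed event hint at the shape of the pipeline file, which is not shown here. The following is only a sketch of a configuration that could produce this output: the input path and the Elasticsearch host are taken from the log lines above, while the `|` delimiter in the `split` option and the use of the `rubydebug` codec are assumptions, inferred from the four-element `message` array and the awesome_print warning.

```
# Sketch of ../config/logstash-elastic.yml (reconstructed, not the original file)
input {
  file {
    # Path reported by logstash.inputs.file in the log above
    path => "/usr/elastic/logs/diy.log"
  }
}

filter {
  mutate {
    # Assumption: fields in each log line are separated by "|".
    # The real delimiter is not visible in the output.
    split => { "message" => "|" }
  }
}

output {
  elasticsearch {
    # Host reported by logstash.outputs.elasticsearch in the log above
    hosts => ["192.168.237.11:9200"]
  }
  # Assumption: events are also printed to the console for inspection
  stdout { codec => rubydebug }
}
```

With a configuration like this, each line appended to `diy.log` would be split into an array under `message` and sent both to Elasticsearch and to the console, which matches the event printed above.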